Donald Trump and Zuckerberg's Matrix

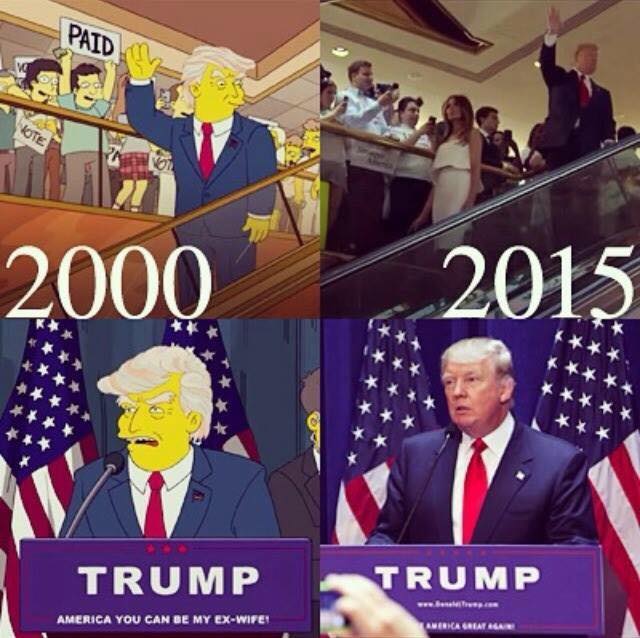

Prediction markets have the Republican nomination for President of the USA going to billionaire reality TV host and megalomaniac Donald Trump. On the other side of the aisle, self-described socialist Sanders is making Hillary Clinton’s campaign sweat. This isn’t “politics as usual” for anyone except the writers of The Simpsons.

According to the “party decides” theory (or at least my interpretation of Nate Silver’s interpretation), the party, as a loose coalition of opinion-forming individuals, can weigh in heavily on who gets nominated. Yet they took so long to settle on Rubio that Trump is now the likeliest candidate. Perhaps this is a sign of the GOP’s weakness, or perhaps they hate Cruz more than they hate Trump. These are strong arguments that have been well made elsewhere, but I would like to present an alternative one: the party just isn’t as effective at forming opinions as it used to be, because social media has created a pervasive platform for radicalisation.

The downfall of traditional media has been written about extensively. And in this election more than ever, Facebook is playing a key role across a wide range of demographic groups. The majority of Americans use Facebook as a news source. The editor of your Facebook Newsfeed is one personally tailored just for you, and this editor carefully selects articles based on what you engage with. For the most part, the news it selects is news you agree with. This plays to well-known psychological biases, and Facebook has hundreds of clever people working on algorithms to cater to them. This isn’t surprising in itself: if the algorithms weren’t doing the filtering, you would do it yourself. In a sense, not much has changed: the majority of left-wing voters don’t read right-wing newspapers, and vice versa.

The difference is the pervasiveness and the social element of social media. The dynamics of Facebook, with its selective Newsfeed algorithms and the fact that people with radical beliefs like and share more content, produce echo chambers, where people share the same ideas and the same arguments. Again, echo chambers themselves are not a new phenomenon: just look at Fox News (or your least favourite newspaper). What is new, however, is that many more people are being exposed to them for much longer. Millions of individuals start their days looking at Facebook and finish them the same way. Most left-wing readers do not read right-wing newspapers, but only radicals read party propaganda all day, every day. I hypothesize that Facebook’s Newsfeed makes it likelier that you start thinking echo chamber views are actually widespread. If an acquaintance keeps sharing information that purports to show vaccines are a global conspiracy, you’re more likely to express skepticism about vaccines than you would be if you thought it unacceptable to do so. Effectively, the internet helps marginalised communities by helping people find like-minded individuals and giving them a platform to express their views. A gay boy in the Bible Belt will find it easier to find similar people and talk about his experiences. So will eugenicists, flat-earth believers and paedophiles.

Facebook, in conjunction with your opinionated buddies, can make the unthinkable thinkable by removing the stigma associated with certain opinions (or vice versa). If you often see articles in your newsfeed about “rape-culture” you’ll have the intuition that it is one of the biggest concerns people have. You might be shocked and perhaps offended when you overhear a casual misogynistic comment because for you it’s a big deal and you’ve read, commented on and liked loads of articles on the objectification of women and related topics. It’s a big transgression for you, but for many people, it just isn’t such a big deal. Similarly, people who hate immigrants may be astounded by the Willkommenskultur of others. If we believe in an objective reality, it is only natural that we assume that others have roughly the same sources of information that we do. Facebook may be further inflating our already misguided estimates of the extent to which others agree with us. The less diverse our social network, the greater the danger that our views will shift considerably from the majority’s without us even noticing. I know educated people who sincerely believe Sanders will be nominated.

So is Facebook responsible for the rise of radicals? A convincing counterargument is that more than anything it seems like the traditional media is going crazy for Trump. Nonetheless, it is an empirically testable hypothesis: radical candidates should be more popular amongst people who use Facebook as a primary news source. I was not able to find any data where I could confirm or deny this hypothesis. Yet, regardless of whether it is wrong or not (I suspect the answer is “it depends”, but even a couple of percentage points of influence can write history), it still raises three important questions.

The first question is related to agency: who supervises the editor robots? If you read an inflammatory article or see a controversial TV show you can easily find out who the editor is and who owns the channel. It goes without saying that the reason you saw it is that you chose to flick on that particular channel or buy that particular newspaper. If I read an article in The Economist, it is because the author wrote it, the editor accepted it and I went and bought the magazine. In theory, journalists have a professional code of conduct. Newsfeed algorithms do not. Moreover, the fact that they are algorithms makes it even more difficult to assess the question of responsibility, because they are a black box nobody really understands: data went in, results came out. They have no professional responsibility to show you a balanced view of the world, and it’s partly your fault for watching too many cat videos. It’s not implausible that systemic biases in how they work may even influence elections.

Secondly, we should be ever more aware that he who controls our perception of reality controls reality. Zuckerberg, as a good student of psychology, no doubt knows this. He is in fact very excited about how perception can be manipulated with gadgets such as the Oculus Rift (as am I). I have no reason to think his intentions are evil: I was lucky enough to have a glimpse of his desk in Facebook’s HQ, and I didn’t see any world conquest plans. But we should be well aware of Lord Acton’s famous quip about absolute power. I used to think China banning Facebook as a dangerous American weapon was ludicrous. After Snowden’s revelations, I am not so sure.

Third, what can we do to prevent ourselves from being unduly influenced by our biased perception of reality? My previous post reviewing Superforecasters gives a few hints.
